Conversion Optimisation

1.08.2022

14-minute read

What are A/B tests, how do they work, and how do you monitor their progress? [FREE GUIDE]



Exactly 53 years ago, Neil Armstrong said, “That's one small step for man, one giant leap for mankind.” The same applies to A/B testing: one small change on your site can bring an enormous improvement in your business's conversion rate.

What is A/B testing?

As a marketer, whenever you prepare content, you probably ask yourself: which version will generate more interaction with users and provide a more pleasing user experience? This question applies to headings, copywriting, CTA buttons, email marketing, graphics, marketing campaigns, and any other content you create.

But wait! You no longer have to rely on your gut feeling or your boss's passing suggestion. A/B tests let you check which version converts better. You can run as many experiments as you like, in various configurations, to see how good or bad your ideas are and choose the winning variation.

How does A/B testing work?

A/B testing, also known as split testing, compares two versions of a particular digital product (multivariate tests go further and test combinations of several elements at once). It randomly divides visitors into two groups, typically 50/50, and shows each group a different version of the content. Based on performance metrics, you can then determine which version performs better, attracts more users, generates more conversions, and ultimately turns visitors into leads.
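A/B testing tools usually handle the random split for you. As a minimal sketch of one common approach, assuming each visitor has a stable ID (for example, from a cookie), the Python below hashes that ID together with a hypothetical experiment name. The same visitor always lands in the same group, while across many visitors the split stays close to 50/50. The function and parameter names are illustrative, not any particular tool's API.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, percent_a: int = 50) -> str:
    """Return 'A' (control) or 'B' (new version) for a visitor, deterministically."""
    # Salt the ID with the experiment name so different tests split independently
    key = f"{experiment}:{user_id}".encode("utf-8")
    # Turn the SHA-256 digest into a bucket between 0 and 99
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100
    return "A" if bucket < percent_a else "B"


# The same visitor gets the same version on every visit
print(assign_variant("visitor-42", "cta-color-test"))

# Across many visitors, the split comes out close to 50/50
groups = [assign_variant(f"visitor-{i}", "cta-color-test") for i in range(10_000)]
print(groups.count("A"), groups.count("B"))
```

Changing `percent_a` gives an uneven split such as 90/10, which can be useful when you want to limit how many visitors see a risky change.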

Who should run A/B tests?

Rome wasn't built in a day, and neither is any digital product. No matter how thorough the R&D and testing phase has been, one factor can still affect your product in ways you didn't anticipate: the HUMAN FACTOR.

In a perfect world, your users would follow exactly the journey designed for them, a bit like Hansel and Gretel following a trail of crumbs. But in the real world, we know that customers don't behave linearly, and each audience behaves differently. This applies to all businesses: eCommerce, SaaS, travel, entertainment, services, and enterprise.

From the previous paragraphs, you might assume that marketers are the main group that should use A/B testing to improve conversion rates. But they are not the only ones. Who else should run A/B tests?

- Product or service manager,

- UX analyst,

- B2B and B2C businesses,

- eCommerce.

Benefits of A/B testing

Growing an innovative business requires smart choices and constant recalculation. A/B testing lets you make more data-driven decisions.

Finding users' pain points

User experience has never been as important as it is now. The market is oversaturated with suppliers, services, and online shops. The winner is not the one with the lowest price, but the one whose UX is flawless.

Why? Because positive UX in e-commerce means 3x more customer visits. On the other hand, more than 88% of e-commerce shoppers rarely come back to an online store after a bad customer experience, and 51% of traffic is made up of returning users. A/B tests let you spot those pain points and bottlenecks and optimize conversion.

Conversion rate optimization (CRO)

Running an A/B test is one way to optimize the conversion rate. Quantitative data contains information on user behavior: what users do, their behavioral patterns, and how they convert. Qualitative data, in contrast, is like a 3-year-old toddler who never stops asking “why”.

So while quantitative research may tell you the percentage of abandoned carts, qualitative research would tell you what caused users to leave them.

Improve your conversion rate with A/B testing

Combining quantitative and qualitative research lets you come up with various hypotheses to test. But how do you know whether a change is a win? The answer is simple: A/B testing.

Split your target audience into two random groups. The first (the control group) sees the current version; the second sees the new one. Track the relative performance of each group, and you'll see which variation wins.
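Tracking relative performance usually starts with each group's conversion rate and the relative lift of the new version over the current one. Here is a minimal Python sketch with a hypothetical function name and made-up counts. Keep in mind that a lift on its own does not yet tell you whether the difference is statistically significant.

```python
def compare_groups(conv_a: int, visitors_a: int, conv_b: int, visitors_b: int) -> dict:
    """Conversion rates of the control (A) and the new version (B), plus B's relative lift."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        # Relative lift: how much better (or worse) B converts compared with A
        "lift": (rate_b - rate_a) / rate_a,
    }


# Hypothetical counts: 200 of 5,000 control visitors and 250 of 5,000 variant visitors converted
result = compare_groups(200, 5_000, 250, 5_000)
print(f"A: {result['rate_a']:.2%}, B: {result['rate_b']:.2%}, lift: {result['lift']:+.1%}")
```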

Achieving business goals

If you have the time and are willing to test everything, go ahead. But it's not 1999 anymore, and running a tremendous number of A/B tests will only sink you in a sea of DATA SPAM.

During A/B testing, focus only on data relevant to your business goal, whether it's a purchase, form submission, file download, registration, newsletter subscription, call-to-action interaction, scroll depth, etc.

How do you analyze and monitor the progress of A/B tests?

Monitoring and analyzing the progress of a test is one of the crucial components of A/B testing. Without it, you won't draw the right conclusions.

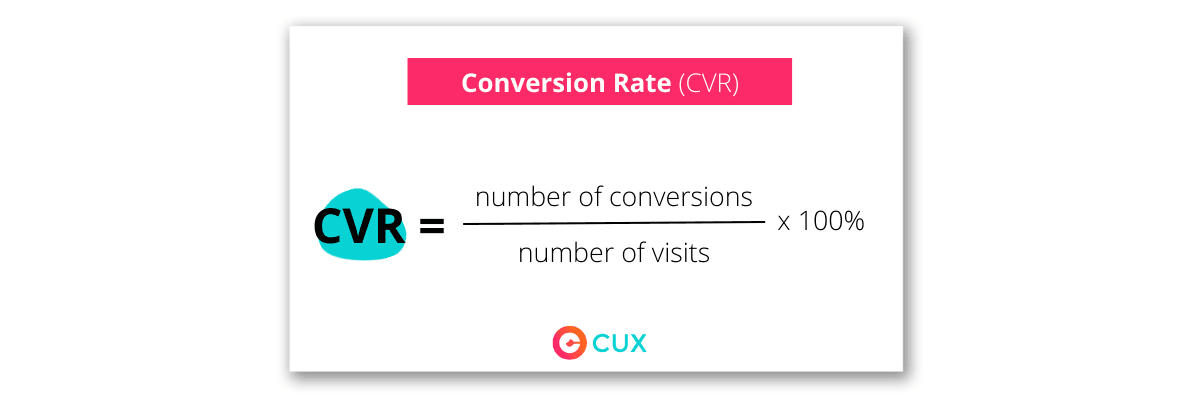

1. Analyze results by checking goal metrics

When designing the experiment, decide on your goal metrics. The most common ones are Conversion Rate (CVR), Click-Through Rate (CTR), and Click-Through Probability (CTP). But don't hesitate to choose a different one.
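Tools differ slightly in how they define these metrics. As a sketch under common definitions (an assumption, since the exact formulas depend on your tool), the Python below computes conversion rate, CTR and CTP from hypothetical per-visitor data. The gap between CTR and CTP appears when one visitor clicks several times: CTR counts every click, while CTP counts each visitor only once.

```python
from dataclasses import dataclass


@dataclass
class VisitorStats:
    pageviews: int   # times this visitor viewed the tested page
    clicks: int      # clicks on the tested element, e.g. a CTA button
    converted: bool  # whether the visitor completed the goal


def goal_metrics(visitors: list[VisitorStats]) -> dict:
    viewers = [v for v in visitors if v.pageviews > 0]
    total_views = sum(v.pageviews for v in viewers)
    total_clicks = sum(v.clicks for v in viewers)
    return {
        # Conversion rate: share of visitors who reached the goal
        "CVR": sum(v.converted for v in viewers) / len(viewers),
        # Click-through rate: clicks per page view (one visitor can click many times)
        "CTR": total_clicks / total_views,
        # Click-through probability: share of visitors who clicked at least once
        "CTP": sum(1 for v in viewers if v.clicks > 0) / len(viewers),
    }


# Hypothetical data: the third visitor clicks three times, which raises CTR but not CTP
visitors = [
    VisitorStats(pageviews=2, clicks=1, converted=True),
    VisitorStats(pageviews=1, clicks=0, converted=False),
    VisitorStats(pageviews=3, clicks=3, converted=False),
    VisitorStats(pageviews=1, clicks=0, converted=False),
]
print(goal_metrics(visitors))
```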

By looking at the numbers, you'll be able to draw first conclusions. But before you declare a winner, the difference between the variations must be statistically significant.

2. Statistical significance

Statistical significance tells you whether the difference between variations is large enough that it is unlikely to be explained by random chance alone. Based on the data generated by the A/B test, it helps you select a winning variation with confidence. The higher the level of statistical significance, the less likely it is that the observed difference is a coincidence. That is why many tools use a 90% to 95% confidence level.

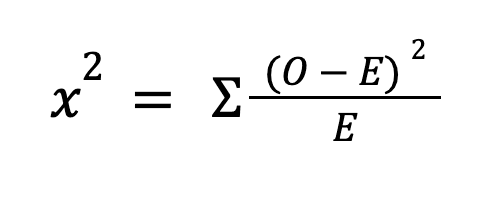

Chi-squared formula for statistical significance:

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

Σ = sum

O = observed, actual values

E = expected values
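To make the chi-squared formula concrete, here is a minimal Python sketch that applies it to a hypothetical A/B test: a 2×2 table of conversions and non-conversions per variation. With one degree of freedom, the p-value equals erfc(√(χ²/2)), so no statistics library is needed. The function name and counts are made up, and the sketch omits Yates' continuity correction, which some tools apply, so their numbers may differ slightly.

```python
import math


def chi_squared_test(conv_a: int, visitors_a: int, conv_b: int, visitors_b: int):
    """Chi-squared test on a 2x2 table of conversions vs. non-conversions."""
    observed = [
        [conv_a, visitors_a - conv_a],  # variation A: converted, did not convert
        [conv_b, visitors_b - conv_b],  # variation B: converted, did not convert
    ]
    row_totals = [visitors_a, visitors_b]
    total_conv = conv_a + conv_b
    grand_total = visitors_a + visitors_b
    col_totals = [total_conv, grand_total - total_conv]

    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            # E: the count expected if both variations converted equally well
            expected = row_totals[i] * col_totals[j] / grand_total
            chi2 += (observed[i][j] - expected) ** 2 / expected  # (O - E)^2 / E
    # With 1 degree of freedom, P(X >= chi2) = erfc(sqrt(chi2 / 2))
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical results: 200/5,000 conversions for A vs. 250/5,000 for B
chi2, p = chi_squared_test(200, 5_000, 250, 5_000)
print(f"chi2 = {chi2:.2f}, p = {p:.3f}, significant at 95%: {p < 0.05}")
```

A p-value below 0.05 corresponds to the 95% confidence level. The sketch assumes at least one conversion and one non-conversion overall; otherwise an expected count is zero and the formula is undefined.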


3. Deeper data analysis

First lessons learned? Now dig deeper and check the data for further insights, such as visitor type, device, or traffic source. This information may shed new light on the results. You will also be able to define your buyer personas and their habits.

- Desktop, mobile, or tablet: which device performed best?

- New versus returning visitors: who converted more?
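If you export per-visitor data from your tool, a breakdown like this is a simple group-by. Here is a minimal Python sketch with hypothetical field names and records. Small segments may be too small to reach statistical significance on their own, so treat segment results as leads for new hypotheses rather than final answers.

```python
from collections import defaultdict


def conversion_by_segment(records: list[dict], segment_key: str) -> dict:
    """Conversion rate per (variant, segment), e.g. segment_key='device'."""
    totals = defaultdict(lambda: [0, 0])  # (variant, segment) -> [conversions, visitors]
    for record in records:
        key = (record["variant"], record[segment_key])
        totals[key][0] += record["converted"]  # True counts as 1, False as 0
        totals[key][1] += 1
    return {key: conversions / visitors for key, (conversions, visitors) in totals.items()}


# Hypothetical records exported from an A/B testing tool
records = [
    {"variant": "A", "device": "mobile", "visitor_type": "new", "converted": False},
    {"variant": "A", "device": "desktop", "visitor_type": "returning", "converted": True},
    {"variant": "B", "device": "mobile", "visitor_type": "new", "converted": True},
    {"variant": "B", "device": "mobile", "visitor_type": "returning", "converted": False},
    {"variant": "B", "device": "desktop", "visitor_type": "new", "converted": True},
]
print(conversion_by_segment(records, "device"))
print(conversion_by_segment(records, "visitor_type"))
```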

A/B testing tools

Many analytics and A/B testing tools can help you run A/B tests and monitor their progress. Here is an overview of a few of them.

Google Analytics

It is the most widely used analytics tool, especially now that GA4 is just around the corner. Google Analytics lets you measure website traffic metrics such as conversion rate, get insights about your website visitors (buyer personas), and map your customer journey.

cux.io

CUX is the first-ever UX & Analytics Automation Tool that allows you to improve conversion rates immediately, turn frustrated users into happy ones, and build a significant market advantage.

Because CUX pre-analyzes data and detects behavioral patterns in Heatmaps and Visit Recordings, you can easily find spots to improve and then test them.

cux.io integrates with Google Analytics and offers a free trial that includes all features.

Optimizely

Optimizely is a tool for testing and optimizing the customer experience and the digital journey. Users can not only run A/B tests but also manage tasks, campaigns, and content, or even run e-commerce shops.

Abtesting.ai

With abtesting.ai, you can easily find elements worth A/B testing, along with suggested changes. The tool uses AI to choose the best combinations of experiments.

What should you A/B test on your site?

CTA button

A call-to-action button can be a real eye-catcher. It's the most important element of your website, landing page, or e-commerce store. Why? Because clicking it signals purchase intent.

A perfect CTA button must be visible, easy to find, and clear about the action you want users to take, like “TRY 30 days free” or “Sign up with email”. You can launch an A/B test to determine the best background color, shape, anchor text, or even smaller details like font size or style.

Headings

The average user spends 55 seconds on a page, which means you have less than a minute to catch their interest. Headings are usually the first thing visitors read. If a heading is not what they were looking for, they will leave your page before those 55 seconds are up. Even if your website follows the art of UX & UI and current trends and has an elaborate CTA button, it won't fulfill its task without good copy.

Run A/B tests on different versions of your headings to see which one works best. It may be the missing element on your road to conversion optimization.

Copy

The copy is what immediately follows the headline. It should be something that complements the headline’s message and expands it a bit.

Graphics

Adding a proper image or graphic to your website, landing page, blog post or marketing campaign can help you increase the conversion rates. People are visual learners, and customers are no different: they want to see a product before they purchase it.

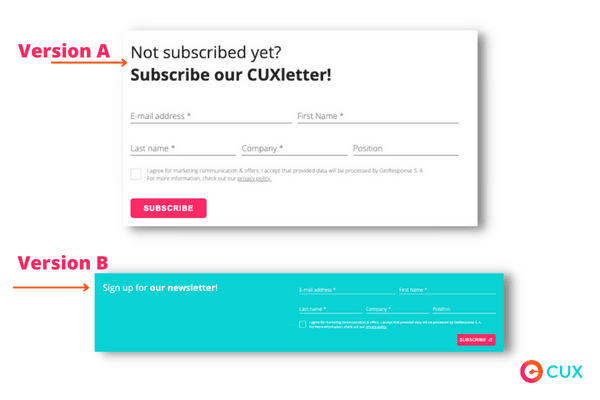

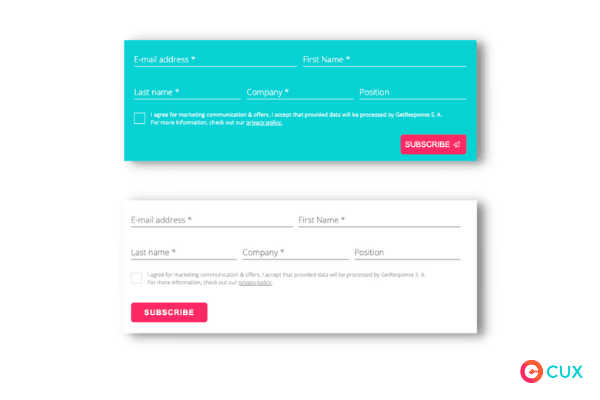

Forms

Experts agree that forms should contain only the essential fields; users are then more likely to share their contact details. But the number of fields is not the only thing you can A/B test. You might also test:

- form color,

- submit button,

- placement,

- placeholder text.

Type of content

Reading text requires a lot of commitment from users. I know it sounds ridiculous, but it's true. So why not try content that is easier to consume and therefore has the potential to yield a higher return on your investment? I'm talking about videos and infographics.

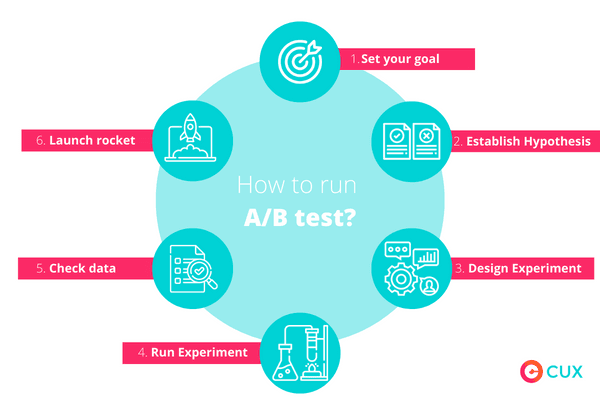

How do you run A/B tests?

You already know what A/B testing is, who should run A/B tests, and what you can test. Now it's time to find out how to run one.

Running an A/B test is like running a marathon. Taking part is one thing; reaching your goal or winning is another. It takes dedicated workouts, a balanced diet tailored to your body's needs, the right choice of exercises, and often cooperation with physiotherapists. An A/B test needs the same sustained effort before it reaches statistical significance.

From now on, I will be your A/B test coach. For the best results, follow this walkthrough of the A/B testing procedure. The 6-step plan focuses on your business goals and conversion rate.

1. Set your goal

Set your business goal and analyze the customer journey with specific metrics. Find the places where conversion really drops and that are relevant to your business goals, and focus on improving them. For example, you might boost revenue by improving the search bar or the checkout process if they cause cart abandonment.

There's no better feeling than setting a goal, working toward it, and achieving or even exceeding it. Remember to choose only business-oriented goals.
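Finding where conversion drops comes down to comparing how many users reach each consecutive step of the journey. Here is a minimal Python sketch with a hypothetical helper and made-up step counts; analytics tools do the same on real journeys and at a much finer level of detail.

```python
def funnel_drop_offs(steps: list[tuple[str, int]]) -> list[tuple[str, float]]:
    """Drop-off rate between consecutive journey steps, largest drop first."""
    drops = []
    for (step, users), (next_step, next_users) in zip(steps, steps[1:]):
        # Share of users who reached `step` but never reached `next_step`
        drops.append((f"{step} -> {next_step}", 1 - next_users / users))
    return sorted(drops, key=lambda drop: drop[1], reverse=True)


# Hypothetical number of users reaching each step of the journey
journey = [("product page", 10_000), ("cart", 4_000), ("checkout", 1_000), ("purchase", 800)]
for transition, drop in funnel_drop_offs(journey):
    print(f"{transition}: {drop:.0%} drop-off")
```

In this made-up journey, the biggest drop is between the cart and the checkout, which would make the checkout a natural candidate for the goal of your next test.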

Find users' pain points & QUICK WINS In less than 2 minutes!

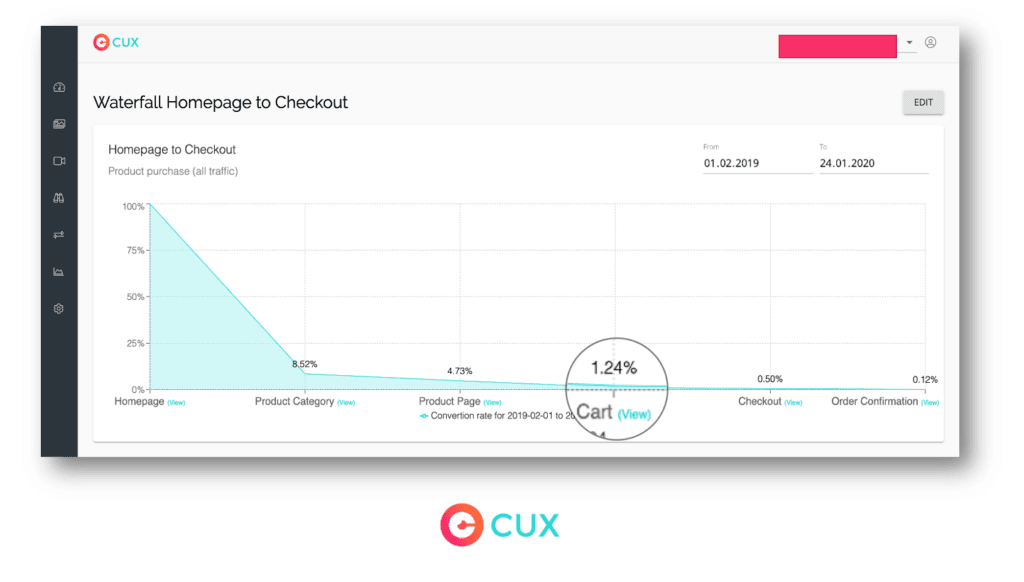

CUX's Conversion Waterfalls analyze real customer journeys, so you no longer waste time on pointless optimization. Conversion Waterfalls show real conversion drops and are an alternative to Hotjar’s funnel analysis.

2. Hypothesis Testing

The next step is to establish your hypothesis statements. Pick one hypothesis to test. You can test more, but with a single hypothesis it is easier to tell later which version improved website performance. Use data from analytics tools to formulate your hypothesis. If you’re a CUX user, you can use Heatmaps, Conversion Waterfalls, and Visit Recordings as sources for your hypotheses. Always keep the big picture of the conversion rate in mind: small changes on one web page may influence your whole website's conversion rate.

3. Design the Experiment

Based on data from the A/B testing tool, design an experiment around the page or app elements you want to examine. This might mean swapping the position of a form, changing the color of a button, switching headings, or adding a video tutorial.

At this point, you also need to pick your goal metric and decide what significant result you want to achieve. That way, you will always have a clear reference point for what you are aiming for.
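Deciding on the result you want to detect also tells you roughly how much traffic the test needs. As a sketch, assuming a two-sided test comparing two conversion rates and the standard normal-approximation sample-size formula, the Python below estimates visitors per variation from the baseline conversion rate, the rate you hope to reach, the significance level (alpha) and the statistical power. The function name and numbers are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, target: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in EACH group to detect a change from `baseline` to `target`."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)            # chance of detecting a real effect
    p_avg = (baseline + target) / 2
    spread = (z_alpha * math.sqrt(2 * p_avg * (1 - p_avg))
              + z_beta * math.sqrt(baseline * (1 - baseline) + target * (1 - target)))
    return math.ceil(spread ** 2 / (target - baseline) ** 2)


# Hypothetical goal: lift a 4% conversion rate to 5% at 95% confidence and 80% power
print(sample_size_per_variant(0.04, 0.05))
```

With these inputs, the estimate comes out at roughly 6,700 visitors per variation. Smaller expected improvements need considerably more traffic, because the required sample grows with the inverse square of the difference.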

4. Run the Experiment

The experiment is ready. Now you just need to wait for users to participate! Your users will be randomly assigned to either the control group or the treatment group, and the A/B testing tool will measure all the necessary metrics.

There is no fixed time limit for an A/B test. Give it as much time as it needs to gather valuable data. Sometimes that takes a week; in other cases, months. It mostly depends on how much traffic your website gets: the more traffic, the faster you'll have results.

5. Analyze data

Before you go with the better-performing version, analyze the gathered data. Make sure no external factors influenced content performance, such as holidays, economic disruptions, or even your competitors.

Your A/B testing tool will present the data from the experiment, showing the difference in how the two versions of your page performed and which one increased conversions.

6. Implement a statistically significant version

If one version is statistically better, you have a winner. If not, don’t worry: it means this experiment didn't show a measurable impact on conversion, and I recommend leaving the page as it was. But if you do have a statistically better variation, implement it! As they say in gambling, winner, winner, chicken dinner!

In both cases, your A/B experiment draws to an end. Now you can start the entire process once again. It's a never-ending story!

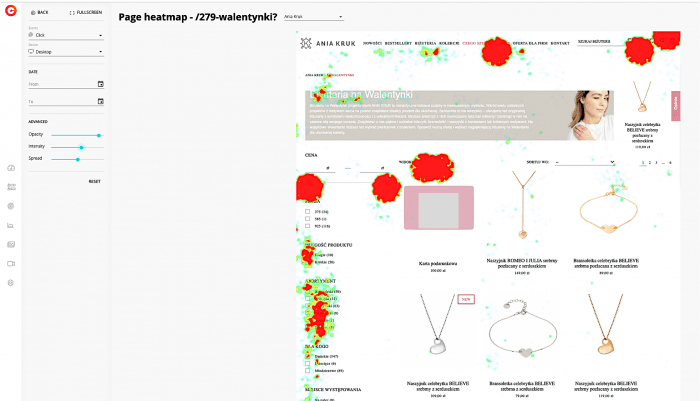

Heatmaps and A/B tests

Heatmaps are a great source of information on users' most significant actions on your pages. They visualize user behavior, such as movements and frustrations. Combine data from Heatmaps and Visit Recordings to spot missed opportunities for conversion!

That is why heatmaps are an ideal source of information on which elements to A/B test. Here you can find 4 ways to increase conversion rate with Heatmaps.

A/B testing examples

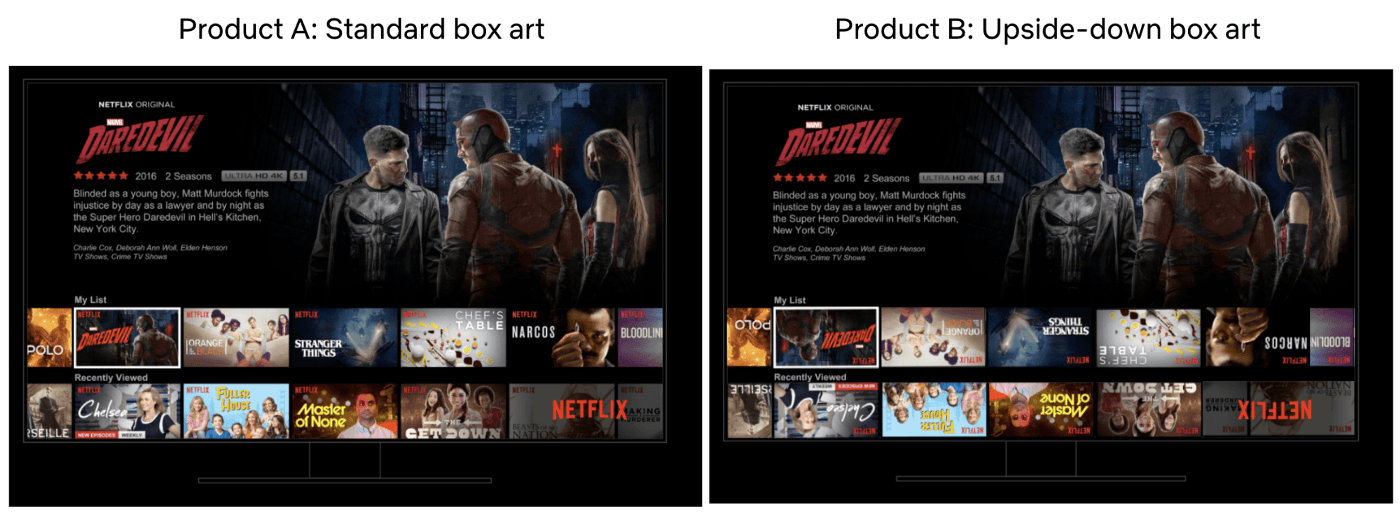

Netflix A/B testing

Even enterprises such as Netflix run A/B tests. They know how important a great user experience is and cannot afford to lose users by implementing unverified changes. Netflix tests every product change with an A/B test before the final implementation.

Learn more about A/B tests conducted by the biggest worldwide VOD service.

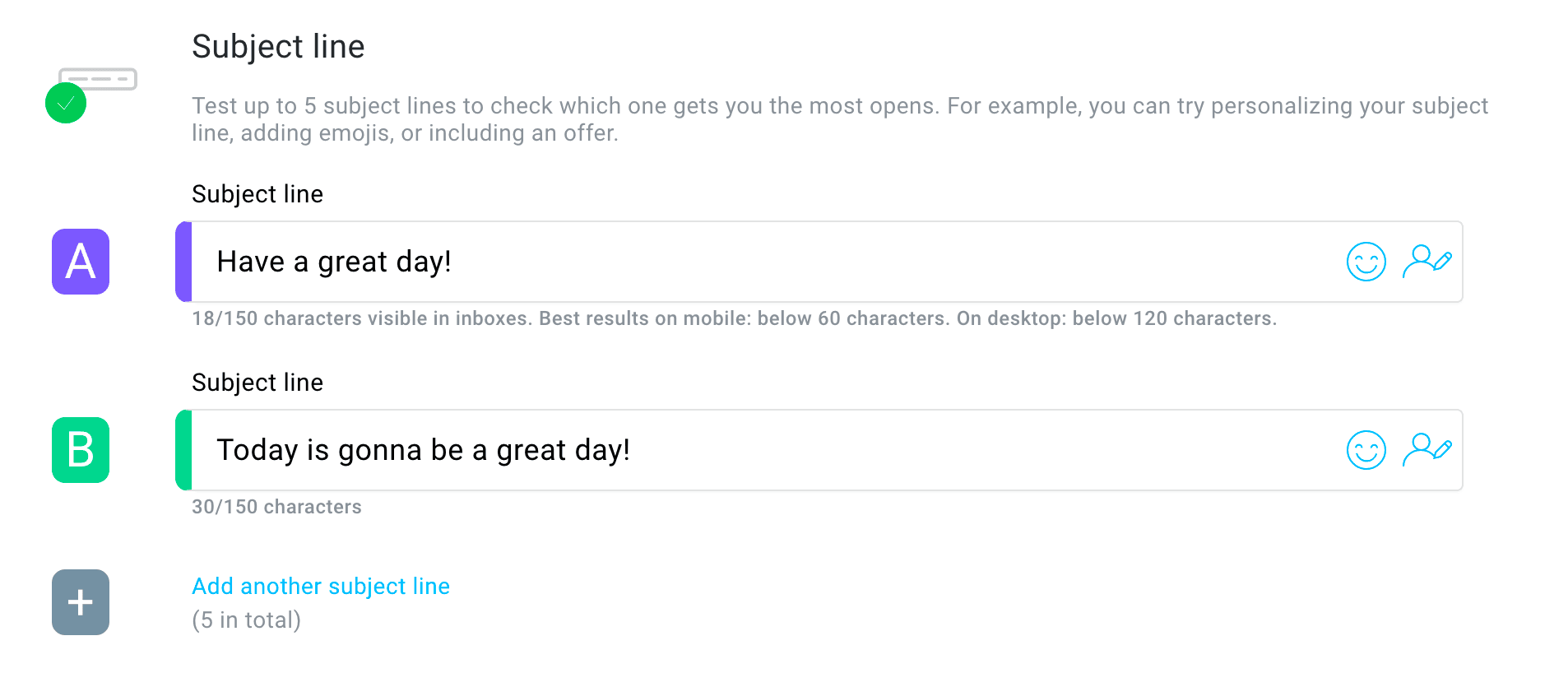

Newsletter A/B Tests provided by GetResponse

A higher conversion rate is desirable in every business. So why restrict yourself to A/B testing only websites or landing pages? You can also run experiments on marketing campaigns or even newsletters. With apps such as GetResponse, you can A/B test elements of email marketing like:

- subject lines,

- content: call to action, headings, video and graphics.

Source: https://www.getresponse.com/

You can split recipients in half or in whatever proportions you like. The first group will be your test group and the second your control group. In line with your experiment, each group receives a different version of the content.
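Email tools such as GetResponse handle this split for you. Purely as an illustration of the idea (not GetResponse's implementation), the Python below shuffles a hypothetical recipient list and cuts it at whatever share you choose. The function name and addresses are made up.

```python
import random
from typing import Optional


def split_recipients(recipients: list[str], test_share: float = 0.5,
                     seed: Optional[int] = None) -> tuple[list[str], list[str]]:
    """Randomly split recipients into (test group, control group)."""
    shuffled = list(recipients)            # copy so the original list stays untouched
    random.Random(seed).shuffle(shuffled)  # a fixed seed makes the split reproducible
    cut = round(len(shuffled) * test_share)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical list: 30% get the new subject line, 70% stay on the current one
recipients = [f"user{i}@example.com" for i in range(100)]
test_group, control_group = split_recipients(recipients, test_share=0.3, seed=1)
print(len(test_group), len(control_group))
```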

Common mistakes while doing A/B testing

Too small control segment

A reliable A/B test (also known as a split test) needs a certain volume of traffic. Without enough volume, the results won't be reliable. As a rough rule of thumb, you need at least 1,000 records to draw useful conclusions, and often many more, depending on your baseline conversion rate and the size of the improvement you want to detect.

Testing too many things in one A/B test

Running numerous A/B tests on a website at the same time makes it difficult to pinpoint which change influenced the results. It's easy to get lost. I highly recommend testing elements one step at a time.

Give it some time! Don’t be in such a rush

Let’s say that after 2 days you see a huge improvement in conversion rates, and you're eager to implement the changes immediately. Take a deep breath and wait! Your target audience might interact differently on each day of the week. If you want a reliable sample, run the test for at least a week. And don't end the test until it reaches statistical significance!

For example, on the Test & Code in Python podcast (Episode 100: A/B Testing - Leemay Nassery), Leemay Nassery shared a case study in which her team tested a personalized experience on a TV platform. The experiment took a few months because they wanted to make sure nothing unexpected influenced the results of the A/B test.

How long should you run an A/B test?

Some A/B tests last a week; others stretch to half a year. It depends on your traffic and on the level of statistical significance you want to reach.

Conclusion of A/B testing

A/B tests are the most common tool for optimizing customer acquisition, retention, and value. Split tests compare two variations to check which one achieves better results.
